An Edit Friendly DDPM Noise Space: Inversion and Manipulations

Inbar Huberman-Spiegelglas, Vladimir Kulikov, Tomer Michaeli

Technion - Israel Institute of Technology

CVPR 2024

[Paper] | [Supplementary] | [Code] | [Hugging-face demo]

Abstract

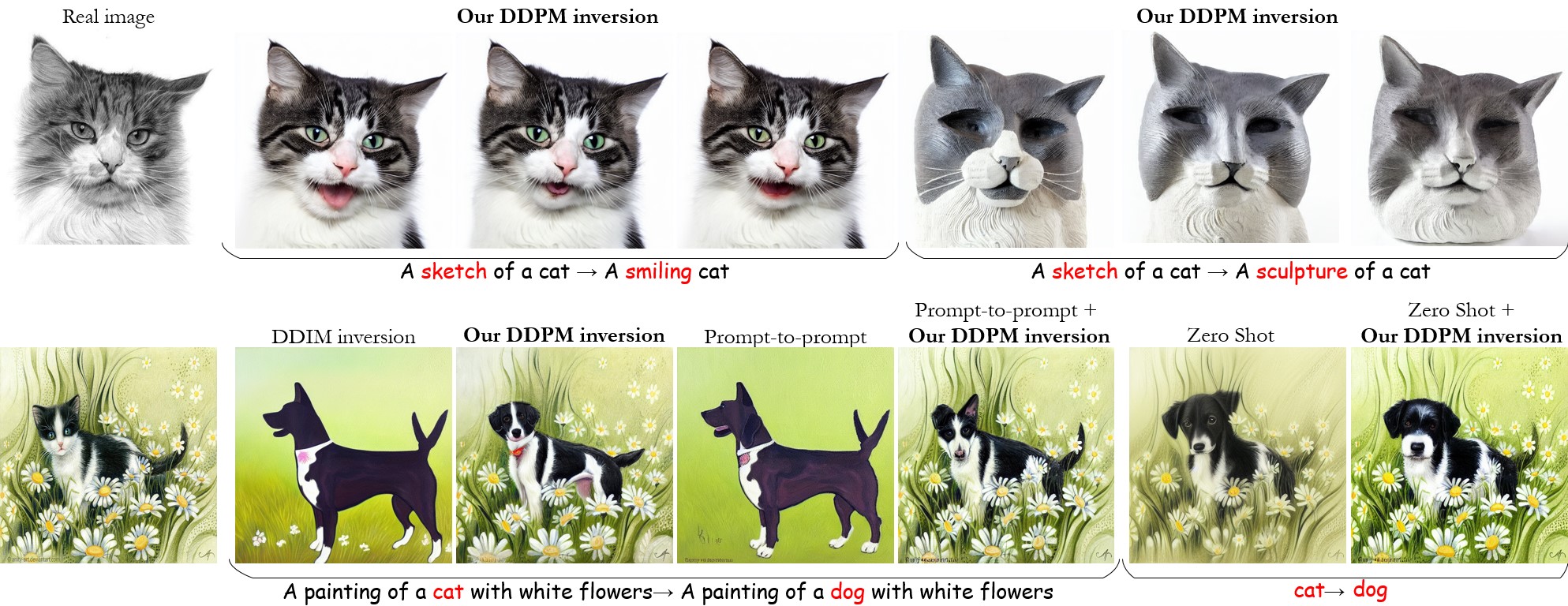

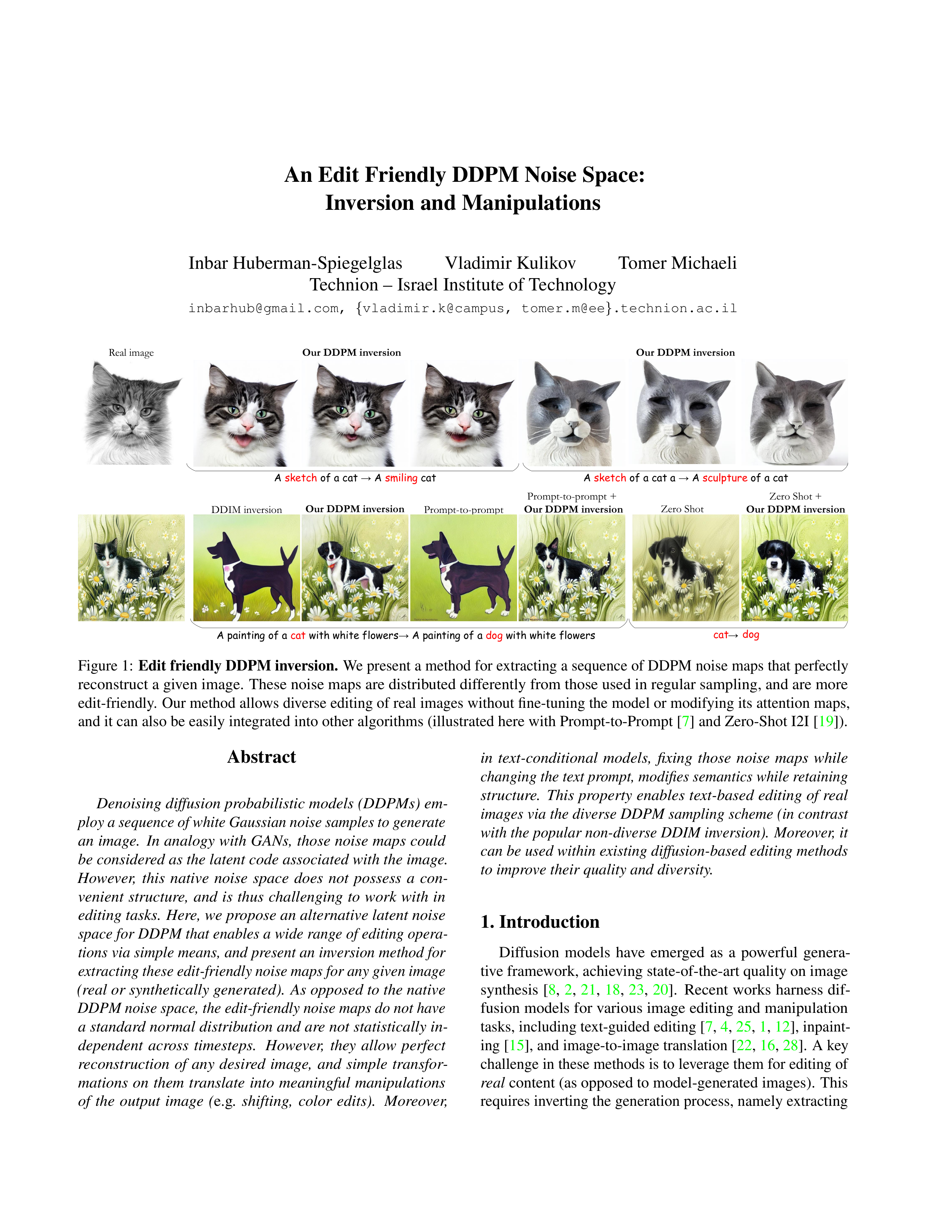

Denoising diffusion probabilistic models (DDPMs) employ a sequence of white Gaussian noise samples to generate an image. In analogy with GANs, those noise maps could be considered as the latent code associated with the generated image. However, this native noise space does not possess a convenient structure, and is thus challenging to work with in editing tasks. Here, we propose an alternative latent noise space for DDPMs that enables a wide range of editing operations via simple means, and present an inversion method for extracting these edit-friendly noise maps for any given image (real or synthetically generated). As opposed to the native DDPM noise space, the edit-friendly noise maps do not have a standard normal distribution and are not statistically independent across timesteps. However, they allow perfect reconstruction of any desired image, and simple transformations on them translate into meaningful manipulations of the output image (e.g., shifting, color edits). Moreover, in text-conditional models, fixing those noise maps while changing the text prompt modifies semantics while retaining structure. We illustrate how this property enables text-based editing of real images via the diverse DDPM sampling scheme (in contrast to the popular non-diverse DDIM inversion). We also show how it can be used within existing diffusion-based editing methods to improve their quality and diversity.
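One way to obtain noise maps with the properties described above can be illustrated with a toy NumPy sketch. It is not the authors' released code: the noise predictor `toy_eps_model`, the linear schedule, the choice of step standard deviation sqrt(beta_t), and all function names are assumptions made for illustration. The sketch noises the image independently at every timestep, solves each sampling step for the noise map that links consecutive noisy images, and then replays those maps to check that the input is reproduced.

```python
import numpy as np

def make_schedule(T=50, beta_start=1e-4, beta_end=0.02):
    """Linear beta schedule (an assumed, commonly used choice)."""
    betas = np.linspace(beta_start, beta_end, T)
    alphas = 1.0 - betas
    return betas, alphas, np.cumprod(alphas)

def toy_eps_model(x_t, k):
    """Hypothetical stand-in for a trained noise predictor."""
    return 0.5 * np.tanh(x_t)

def step_mean(x_t, k, schedule):
    """Mean of one DDPM sampling step, from the predicted noise."""
    betas, alphas, alpha_bars = schedule
    eps = toy_eps_model(x_t, k)
    return (x_t - betas[k] / np.sqrt(1.0 - alpha_bars[k]) * eps) / np.sqrt(alphas[k])

def invert(x0, schedule, rng):
    """Extract one noise map per timestep for the image x0."""
    betas, _, alpha_bars = schedule
    T = len(betas)
    # Noisy versions of x0, each built with its own independent noise sample.
    xs = [x0]
    for k in range(T):
        noise = rng.standard_normal(x0.shape)
        xs.append(np.sqrt(alpha_bars[k]) * x0 + np.sqrt(1.0 - alpha_bars[k]) * noise)
    # Solve each sampling step for the noise map that maps xs[k + 1] to xs[k].
    zs = [(xs[k] - step_mean(xs[k + 1], k, schedule)) / np.sqrt(betas[k])
          for k in range(T)]
    return xs[T], zs

def generate(x_T, zs, schedule):
    """Run the sampling chain with fixed noise maps instead of fresh noise."""
    betas = schedule[0]
    x = x_T
    for k in reversed(range(len(betas))):
        x = step_mean(x, k, schedule) + np.sqrt(betas[k]) * zs[k]
    return x

rng = np.random.default_rng(0)
schedule = make_schedule()
x0 = rng.standard_normal((8, 8))  # toy "image"
x_T, zs = invert(x0, schedule, rng)
recon = generate(x_T, zs, schedule)
print("max reconstruction error:", np.abs(recon - x0).max())
```

Because each noise map is defined by solving a sampling step exactly, the replayed chain retraces the same noisy images, so reconstruction holds up to floating-point error regardless of how good the noise predictor is.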

Text Guided Editing of Real Images

Our inversion can be used for text-based editing of real images, either by itself or in combination with other editing methods.

A photo of an old church

A photo of a wooden house

A cartoon of a cat

An image of a bear

A photo of a horse in the field

A photo of a zebra in the field

An origami of a hummingbird

A sketch of a parrot

A scene of a valley

A scene of a valley with waterfall

A toy of a jeep

A cartoon of a jeep

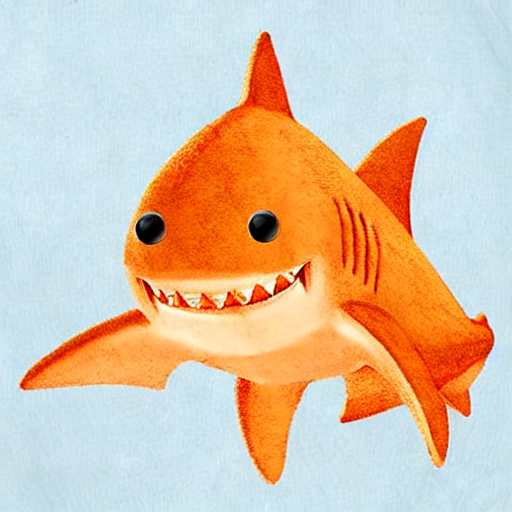

Diversity in Our Method

Due to the stochastic nature of our method, we can generate diverse outputs, a feature that is not naturally available with methods relying on the DDIM inversion.
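A toy NumPy sketch can make the source of this diversity concrete. Everything here is an assumption for illustration, not the authors' implementation: `toy_eps_model` is a hypothetical conditional noise predictor, the scalars `src_cond` and `tgt_cond` stand in for source and target text embeddings, and the schedule and step standard deviation sqrt(beta_t) are arbitrary choices. The image is inverted under the source condition with two different random seeds, and each set of noise maps is then replayed under the target condition.

```python
import numpy as np

T = 50
betas = np.linspace(1e-4, 0.02, T)  # assumed linear schedule
alphas = 1.0 - betas
alpha_bars = np.cumprod(alphas)

def toy_eps_model(x_t, k, cond):
    """Hypothetical conditional noise predictor; `cond` mimics a text embedding."""
    return 0.5 * np.tanh(x_t + cond)

def step_mean(x_t, k, cond):
    """Mean of one DDPM sampling step under condition `cond`."""
    eps = toy_eps_model(x_t, k, cond)
    return (x_t - betas[k] / np.sqrt(1.0 - alpha_bars[k]) * eps) / np.sqrt(alphas[k])

def invert(x0, cond, seed):
    """Extract noise maps for x0; the seed controls the independent noising."""
    rng = np.random.default_rng(seed)
    xs = [x0] + [np.sqrt(alpha_bars[k]) * x0
                 + np.sqrt(1.0 - alpha_bars[k]) * rng.standard_normal(x0.shape)
                 for k in range(T)]
    zs = [(xs[k] - step_mean(xs[k + 1], k, cond)) / np.sqrt(betas[k])
          for k in range(T)]
    return xs[T], zs

def generate(x_T, zs, cond):
    """Replay fixed noise maps, possibly under a different condition."""
    x = x_T
    for k in reversed(range(T)):
        x = step_mean(x, k, cond) + np.sqrt(betas[k]) * zs[k]
    return x

x0 = np.random.default_rng(0).standard_normal((8, 8))  # toy "image"
src_cond, tgt_cond = 0.0, 1.0  # stand-ins for source and target prompts

edits = []
for seed in (1, 2):
    x_T, zs = invert(x0, src_cond, seed)
    # Replaying under the source condition reproduces the input.
    assert np.allclose(generate(x_T, zs, src_cond), x0)
    # Replaying under the target condition yields an edited output.
    edits.append(generate(x_T, zs, tgt_cond))

print("edit 1 vs. input:", np.abs(edits[0] - x0).max())
print("edit 1 vs. edit 2:", np.abs(edits[0] - edits[1]).max())
```

In this toy setting, both seeds reconstruct the input under the source condition, while the outputs under the target condition differ from the input and from each other, since each inversion draws its own noise.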

A photo of a car on the side of the street

A photo of a truck on the side of the street

A cartoon of a cat

An origami of a dog

A cartoon of a castle

An embroidery of a temple

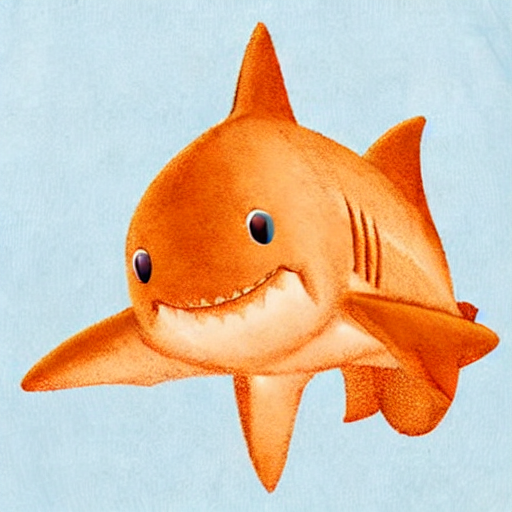

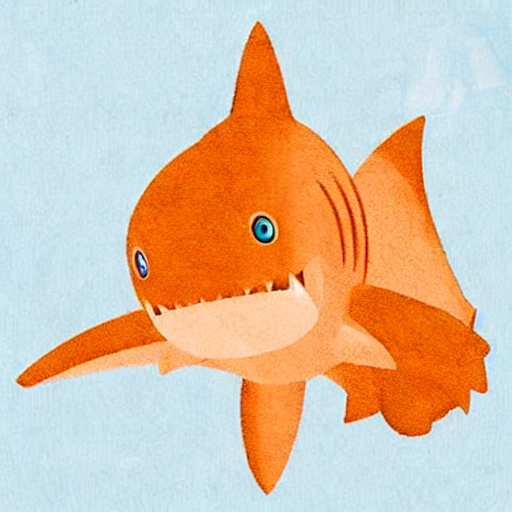

A painting of a goldfish

A video-game of a shark

Follow up Works

Zero-Shot Unsupervised and Text-Based Audio Editing Using DDPM Inversion. Hila Manor and Tomer Michaeli. Applies DDPM inversion to audio.

Training-Free Consistent Text-to-Image Generation. Yoad Tewel, Omri Kaduri, Rinon Gal, Yoni Kasten, Lior Wolf, Gal Chechik, Yuval Atzmon. Uses DDPM inversion for consistent subject generation.

LEDITS: Real Image Editing with DDPM Inversion and Semantic Guidance. Linoy Tsaban, Apolinário Passos. Integrates DDPM inversion into SEGA.

An Edit Friendly DDPM Noise Space: Inversion and Manipulations. Inbar Huberman-Spiegelglas, Vladimir Kulikov, Tomer Michaeli. [Arxiv] [Paper]
